In this post, we show how to create a new user in OpenLDAP using the Mule LDAP connector.

This flow has been tested against an OpenLDAP server on a Mac, using the core schema. We also need to set up an LDAP tree such as ou=people,dc=example,dc=com.

Here is the flow we are using:

- Receive a create-user request via an HTTP GET request (passing in only the dn, the distinguished name)

- Look up the dn in LDAP and return the lookup result

- If the lookup fails with a NameNotFoundException, the exception flow is invoked

- A Java transformer pulls out the dn, fills in a set of predefined user data (for demo purposes), and puts everything in a map

- An LDAP transformer converts the map into an LDAPEntry

- The LDAP connector is called to insert the newly created LDAPEntry into LDAP
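The lookup-then-add logic in the steps above can be sketched in plain Java. This is only an illustration of the control flow, not the Mule configuration: the `Directory` interface and its in-memory implementation are hypothetical stand-ins for the LDAP connector, and the hard-coded attributes are demo values.

```java
import java.util.HashMap;
import java.util.LinkedHashMap;
import java.util.Map;
import javax.naming.NameNotFoundException;

class AddIfNotExistsSketch {

    // Hypothetical stand-in for the LDAP connector's lookup and add operations.
    interface Directory {
        Map<String, Object> lookup(String dn) throws NameNotFoundException;
        void add(Map<String, Object> entry);
    }

    // In-memory directory, used only so the sketch runs without an LDAP server.
    static class InMemoryDirectory implements Directory {
        private final Map<String, Map<String, Object>> entries =
                new HashMap<String, Map<String, Object>>();

        public Map<String, Object> lookup(String dn) throws NameNotFoundException {
            Map<String, Object> entry = entries.get(dn);
            if (entry == null) {
                throw new NameNotFoundException("No Such Object: " + dn);
            }
            return entry;
        }

        public void add(Map<String, Object> entry) {
            entries.put((String) entry.get("dn"), entry);
        }
    }

    // Normal path: return the existing entry. Exception path: build and add a new one.
    static Map<String, Object> lookupOrCreate(Directory directory, String dn) {
        try {
            return directory.lookup(dn);
        } catch (NameNotFoundException e) {
            Map<String, Object> entry = new LinkedHashMap<String, Object>();
            entry.put("dn", dn);
            entry.put("sn", "foobar");          // demo value
            entry.put("cn", "foobar");          // demo value
            entry.put("objectClass", "person"); // demo value
            directory.add(entry);
            return entry;
        }
    }

    public static void main(String[] args) {
        Directory directory = new InMemoryDirectory();
        String dn = "cn=ldapUser,ou=people,dc=example,dc=com";
        System.out.println(lookupOrCreate(directory, dn)); // first call adds the entry
        System.out.println(lookupOrCreate(directory, dn)); // second call finds it
    }
}
```

In the Mule flow, the `catch` branch corresponds to the exception flow, and the `try` branch to the normal flow.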

Before building the flow, we need to install the LDAP connector. In Mule Studio, go to Help > Install New Software, select "MuleStudio Cloud Connectors Update Site", and search for "ldap". Check "LDAP Connector Mule Studio Extension" and proceed with the installation.

Next, we need to configure an LDAP global element by clicking the "Add" button under Global Elements.

Search for "ldap", select "LDAP" under "Cloud Connectors", and click "OK".

Fill in "Name", "Principal DN", "Password", and "URL", then click "OK".
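For orientation, these fields map onto the usual JNDI connection settings (the log output further down shows the connector binding through JNDI with simple authentication). The sketch below builds such an environment in plain Java. The class name is hypothetical, the URL and principal are the ones that appear in the log, and the password is a placeholder.

```java
import java.util.Hashtable;
import javax.naming.Context;

class LdapEnvironmentSketch {

    // Collects the same three values the global element asks for into a JNDI environment.
    static Hashtable<String, String> buildEnvironment(String url, String principalDn, String password) {
        Hashtable<String, String> env = new Hashtable<String, String>();
        env.put(Context.INITIAL_CONTEXT_FACTORY, "com.sun.jndi.ldap.LdapCtxFactory");
        env.put(Context.PROVIDER_URL, url);
        env.put(Context.SECURITY_AUTHENTICATION, "simple");
        env.put(Context.SECURITY_PRINCIPAL, principalDn);
        env.put(Context.SECURITY_CREDENTIALS, password);
        return env;
    }

    public static void main(String[] args) {
        // "changeit" is a placeholder password, not a value from the original setup.
        Hashtable<String, String> env =
                buildEnvironment("ldap://localhost", "cn=admin,dc=example,dc=com", "changeit");
        // Passing env to new javax.naming.directory.InitialDirContext(env) would open the connection.
        System.out.println(env.get(Context.PROVIDER_URL));
    }
}
```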

Here is a picture of the Mule flow. The normal flow returns the lookup result if the LDAP entry already exists. The exception flow is invoked when the lookup fails, and it adds the LDAP user.

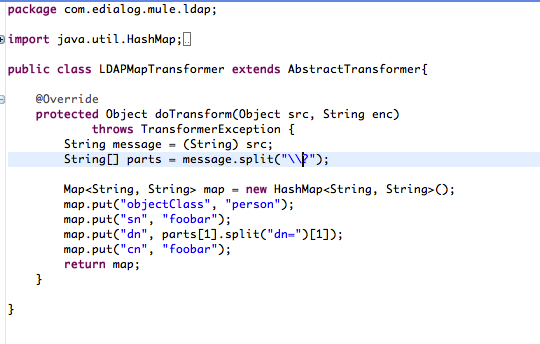

The Java LDAP to Map transformer is written for demo purposes, so most of the user information in it is hard-coded.
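As a rough illustration of what such a transformer does, the sketch below builds the map in plain Java. The class and method names are hypothetical, the Mule transformer wiring is omitted, and the attribute values (sn and cn set to foobar, objectClass set to person) are taken from the entry logged in the flow output rather than from the original code.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical helper, not the original transformer: it only shows the map-building step.
class LdapUserMapSketch {

    // Builds the attribute map for a new entry from the dn passed in the HTTP request.
    static Map<String, Object> buildUserMap(String dn) {
        if (dn == null || dn.trim().isEmpty()) {
            throw new IllegalArgumentException("dn must not be empty");
        }
        Map<String, Object> entry = new LinkedHashMap<String, Object>();
        entry.put("dn", dn.trim());
        // Hard-coded demo values, matching the entry logged by the flow.
        entry.put("sn", "foobar");
        entry.put("cn", "foobar");
        entry.put("objectClass", "person");
        return entry;
    }

    public static void main(String[] args) {
        System.out.println(buildUserMap("cn=ldapUser,ou=people,dc=example,dc=com"));
    }
}
```

A real entry would normally take these values from the request instead of hard-coding them.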

To test this flow, enter the following URL:

http://localhost:8082/ldap?dn=cn=ldapUser,ou=people,dc=example,dc=com

This causes a new LDAP user to be created under ou=people,dc=example,dc=com.
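When calling the endpoint from code rather than typing the URL, the dn can be percent-encoded so that its `=` and `,` characters travel as a single query parameter. The sketch below only builds the request URL. The class name is hypothetical, and it assumes the HTTP endpoint decodes the query parameter before the flow reads it.

```java
import java.io.UnsupportedEncodingException;
import java.net.URLEncoder;

class CreateUserUrlSketch {

    // Appends the dn as a percent-encoded query parameter to the endpoint URL.
    static String buildUrl(String baseUrl, String dn) {
        try {
            return baseUrl + "?dn=" + URLEncoder.encode(dn, "UTF-8");
        } catch (UnsupportedEncodingException e) {
            // UTF-8 support is required of every Java platform, so this should not happen.
            throw new IllegalStateException("UTF-8 is not available", e);
        }
    }

    public static void main(String[] args) {
        System.out.println(buildUrl("http://localhost:8082/ldap",
                "cn=ldapUser,ou=people,dc=example,dc=com"));
        // prints http://localhost:8082/ldap?dn=cn%3DldapUser%2Cou%3Dpeople%2Cdc%3Dexample%2Cdc%3Dcom
    }
}
```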

We can verify this by looking at the Mule flow output:

log4j: Trying to find [log4j.xml] using context classloader sun.misc.Launcher$AppClassLoader@1feed786.

log4j: Trying to find [log4j.xml] using sun.misc.Launcher$AppClassLoader@1feed786 class loader.

log4j: Trying to find [log4j.xml] using ClassLoader.getSystemResource().

log4j: Trying to find [log4j.properties] using context classloader sun.misc.Launcher$AppClassLoader@1feed786.

log4j: Using URL [jar:file:/Users/legu/mule-resources/MuleStudio/plugins/org.mule.tooling.server.3.3.2.ee_1.3.2.201212121942/mule/tooling/tooling-support-3.3.1.jar!/log4j.properties] for automatic log4j configuration.

log4j: Reading configuration from URL jar:file:/Users/legu/mule-resources/MuleStudio/plugins/org.mule.tooling.server.3.3.2.ee_1.3.2.201212121942/mule/tooling/tooling-support-3.3.1.jar!/log4j.properties

log4j: Parsing for [root] with value=[INFO, console].

log4j: Level token is [INFO].

log4j: Category root set to INFO

log4j: Parsing appender named "console".

log4j: Parsing layout options for "console".

log4j: Setting property [conversionPattern] to [%-5p %d [%t] %c: %m%n].

log4j: End of parsing for "console".

log4j: Parsed "console" options.

log4j: Parsing for [com.mycompany] with value=[DEBUG].

log4j: Level token is [DEBUG].

log4j: Category com.mycompany set to DEBUG

log4j: Handling log4j.additivity.com.mycompany=[null]

log4j: Parsing for [org.springframework.beans.factory] with value=[WARN].

log4j: Level token is [WARN].

log4j: Category org.springframework.beans.factory set to WARN

log4j: Handling log4j.additivity.org.springframework.beans.factory=[null]

log4j: Parsing for [org.apache] with value=[WARN].

log4j: Level token is [WARN].

log4j: Category org.apache set to WARN

log4j: Handling log4j.additivity.org.apache=[null]

log4j: Parsing for [org.mule] with value=[INFO].

log4j: Level token is [INFO].

log4j: Category org.mule set to INFO

log4j: Handling log4j.additivity.org.mule=[null]

log4j: Parsing for [org.hibernate.engine.StatefulPersistenceContext.ProxyWarnLog] with value=[ERROR].

log4j: Level token is [ERROR].

log4j: Category org.hibernate.engine.StatefulPersistenceContext.ProxyWarnLog set to ERROR

log4j: Handling log4j.additivity.org.hibernate.engine.StatefulPersistenceContext.ProxyWarnLog=[null]

log4j: Finished configuring.

INFO 2013-02-08 16:17:51,201 [main] org.mule.module.launcher.application.DefaultMuleApplication:

++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

+ New app 'ldap_test' +

++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

INFO 2013-02-08 16:17:51,203 [main] org.mule.module.launcher.application.DefaultMuleApplication:

++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

+ Initializing app 'ldap_test' +

++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

INFO 2013-02-08 16:17:51,382 [main] org.mule.lifecycle.AbstractLifecycleManager: Initialising RegistryBroker

INFO 2013-02-08 16:17:51,546 [main] org.mule.config.spring.MuleApplicationContext: Refreshing org.mule.config.spring.MuleApplicationContext@48ff4cf: startup date [Fri Feb 08 16:17:51 EST 2013]; root of context hierarchy

WARN 2013-02-08 16:17:52,585 [main] org.mule.config.spring.parsers.assembly.DefaultBeanAssembler: Cannot assign class java.lang.Object to interface org.mule.api.AnnotatedObject

INFO 2013-02-08 16:17:53,363 [main] org.mule.lifecycle.AbstractLifecycleManager: Initialising model: _muleSystemModel

WARN 2013-02-08 16:17:53,446 [main] org.springframework.beans.GenericTypeAwarePropertyDescriptor: Invalid JavaBean property 'port' being accessed! Ambiguous write methods found next to actually used [public void org.mule.endpoint.URIBuilder.setPort(java.lang.String)]: [public void org.mule.endpoint.URIBuilder.setPort(int)]

INFO 2013-02-08 16:17:53,525 [main] org.mule.lifecycle.AbstractLifecycleManager: Initialising connector: connector.http.mule.default

INFO 2013-02-08 16:17:53,591 [main] org.mule.construct.FlowConstructLifecycleManager: Initialising flow: LDAP_ADD_IF_NOT_EXISTS

INFO 2013-02-08 16:17:53,591 [main] org.mule.exception.CatchMessagingExceptionStrategy: Initialising exception listener: org.mule.exception.CatchMessagingExceptionStrategy@4cfed6f7

INFO 2013-02-08 16:17:53,599 [main] org.mule.processor.SedaStageLifecycleManager: Initialising service: LDAP_ADD_IF_NOT_EXISTS.stage1

INFO 2013-02-08 16:17:53,610 [main] org.mule.config.builders.AutoConfigurationBuilder: Configured Mule using "org.mule.config.spring.SpringXmlConfigurationBuilder" with configuration resource(s): "[ConfigResource{resourceName='/Users/legu/MuleStudio/workspace/.mule/apps/ldap_test/LDAP Create USER if not exist.xml'}]"

INFO 2013-02-08 16:17:53,610 [main] org.mule.config.builders.AutoConfigurationBuilder: Configured Mule using "org.mule.config.builders.AutoConfigurationBuilder" with configuration resource(s): "[ConfigResource{resourceName='/Users/legu/MuleStudio/workspace/.mule/apps/ldap_test/LDAP Create USER if not exist.xml'}]"

INFO 2013-02-08 16:17:53,610 [main] org.mule.module.launcher.application.DefaultMuleApplication: Monitoring for hot-deployment: /Users/legu/MuleStudio/workspace/.mule/apps/ldap_test/LDAP Create USER if not exist.xml

INFO 2013-02-08 16:17:53,612 [main] org.mule.module.launcher.application.DefaultMuleApplication:

++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

+ Starting app 'ldap_test' +

++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

INFO 2013-02-08 16:17:53,619 [main] org.mule.util.queue.TransactionalQueueManager: Starting ResourceManager

INFO 2013-02-08 16:17:53,621 [main] org.mule.util.queue.TransactionalQueueManager: Started ResourceManager

INFO 2013-02-08 16:17:53,623 [main] org.mule.transport.http.HttpConnector: Connected: HttpConnector

{

name=connector.http.mule.default

lifecycle=initialise

this=12133926

numberOfConcurrentTransactedReceivers=4

createMultipleTransactedReceivers=true

connected=true

supportedProtocols=[http]

serviceOverrides=<none>

}

INFO 2013-02-08 16:17:53,623 [main] org.mule.transport.http.HttpConnector: Starting: HttpConnector

{

name=connector.http.mule.default

lifecycle=initialise

this=12133926

numberOfConcurrentTransactedReceivers=4

createMultipleTransactedReceivers=true

connected=true

supportedProtocols=[http]

serviceOverrides=<none>

}

INFO 2013-02-08 16:17:53,623 [main] org.mule.lifecycle.AbstractLifecycleManager: Starting connector: connector.http.mule.default

INFO 2013-02-08 16:17:53,626 [main] org.mule.module.ldap.agents.DefaultSplashScreenAgent:

++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

+ DevKit Extensions (1) used in this application +

+ LDAP 1.0.1 (DevKit 3.3.1 Build UNNAMED.1297.150f2c9)+ +

++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

INFO 2013-02-08 16:17:53,627 [main] org.mule.lifecycle.AbstractLifecycleManager: Starting model: _muleSystemModel

INFO 2013-02-08 16:17:53,628 [main] org.mule.construct.FlowConstructLifecycleManager: Starting flow: LDAP_ADD_IF_NOT_EXISTS

INFO 2013-02-08 16:17:53,628 [main] org.mule.processor.SedaStageLifecycleManager: Starting service: LDAP_ADD_IF_NOT_EXISTS.stage1

INFO 2013-02-08 16:17:53,634 [main] org.mule.transport.http.HttpConnector: Registering listener: LDAP_ADD_IF_NOT_EXISTS on endpointUri: http://localhost:8082/ldap

INFO 2013-02-08 16:17:53,639 [main] org.mule.transport.service.DefaultTransportServiceDescriptor: Loading default response transformer: org.mule.transport.http.transformers.MuleMessageToHttpResponse

INFO 2013-02-08 16:17:53,641 [main] org.mule.lifecycle.AbstractLifecycleManager: Initialising: 'null'. Object is: HttpMessageReceiver

INFO 2013-02-08 16:17:53,646 [main] org.mule.transport.http.HttpMessageReceiver: Connecting clusterizable message receiver

INFO 2013-02-08 16:17:53,650 [main] org.mule.lifecycle.AbstractLifecycleManager: Starting: 'null'. Object is: HttpMessageReceiver

INFO 2013-02-08 16:17:53,650 [main] org.mule.transport.http.HttpMessageReceiver: Starting clusterizable message receiver

INFO 2013-02-08 16:17:53,652 [main] org.mule.module.launcher.application.DefaultMuleApplication: Reload interval: 3000

INFO 2013-02-08 16:17:53,698 [main] org.mule.module.management.agent.WrapperManagerAgent: This JVM hasn't been launched by the wrapper, the agent will not run.

INFO 2013-02-08 16:17:53,731 [main] org.mule.module.management.agent.JmxAgent: Attempting to register service with name: Mule.ldap_test:type=Endpoint,service="LDAP_ADD_IF_NOT_EXISTS",connector=connector.http.mule.default,name="endpoint.http.localhost.8082.ldap"

INFO 2013-02-08 16:17:53,732 [main] org.mule.module.management.agent.JmxAgent: Registered Endpoint Service with name: Mule.ldap_test:type=Endpoint,service="LDAP_ADD_IF_NOT_EXISTS",connector=connector.http.mule.default,name="endpoint.http.localhost.8082.ldap"

INFO 2013-02-08 16:17:53,733 [main] org.mule.module.management.agent.JmxAgent: Registered Connector Service with name Mule.ldap_test:type=Connector,name="connector.http.mule.default.1"

INFO 2013-02-08 16:17:53,735 [main] org.mule.DefaultMuleContext:

**********************************************************************

* Application: ldap_test *

* OS encoding: MacRoman, Mule encoding: UTF-8 *

* *

* Agents Running: *

* DevKit Extension Information *

* Clustering Agent *

* JMX Agent *

**********************************************************************

INFO 2013-02-08 16:17:53,736 [main] org.mule.module.launcher.DeploymentService:

++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

+ Started app 'ldap_test' +

++++++++++++++++++++++++++++++++++++++++++++++++++++++++++++

INFO 2013-02-08 16:18:29,991 [[ldap_test].connector.http.mule.default.receiver.02] org.mule.module.ldap.ldap.api.jndi.LDAPJNDIConnection: Binded to ldap://localhost with simple authentication as cn=admin,dc=example,dc=com

ERROR 2013-02-08 16:18:29,993 [[ldap_test].connector.http.mule.default.receiver.02] org.mule.module.ldap.ldap.api.jndi.LDAPJNDIConnection: Lookup failed.

javax.naming.NameNotFoundException: [LDAP: error code 32 - No Such Object]; remaining name 'cn=ldapUser,ou=people,dc=example,dc=com'

at com.sun.jndi.ldap.LdapCtx.mapErrorCode(LdapCtx.java:3092)

at com.sun.jndi.ldap.LdapCtx.processReturnCode(LdapCtx.java:3013)

at com.sun.jndi.ldap.LdapCtx.processReturnCode(LdapCtx.java:2820)

at com.sun.jndi.ldap.LdapCtx.c_getAttributes(LdapCtx.java:1312)

at com.sun.jndi.toolkit.ctx.ComponentDirContext.p_getAttributes(ComponentDirContext.java:213)

at com.sun.jndi.toolkit.ctx.PartialCompositeDirContext.getAttributes(PartialCompositeDirContext.java:121)

at com.sun.jndi.toolkit.ctx.PartialCompositeDirContext.getAttributes(PartialCompositeDirContext.java:109)

at javax.naming.directory.InitialDirContext.getAttributes(InitialDirContext.java:123)

at javax.naming.directory.InitialDirContext.getAttributes(InitialDirContext.java:118)

at org.mule.module.ldap.ldap.api.jndi.LDAPJNDIConnection.lookup(LDAPJNDIConnection.java:465)

at org.mule.module.ldap.LDAPConnector.lookup(LDAPConnector.java:480)

at org.mule.module.ldap.processors.LookupMessageProcessor$1.process(LookupMessageProcessor.java:130)

at org.mule.module.ldap.process.ProcessCallbackProcessInterceptor.execute(ProcessCallbackProcessInterceptor.java:18)

at org.mule.module.ldap.process.ManagedConnectionProcessInterceptor.execute(ManagedConnectionProcessInterceptor.java:63)

at org.mule.module.ldap.process.ManagedConnectionProcessInterceptor.execute(ManagedConnectionProcessInterceptor.java:21)

at org.mule.module.ldap.process.RetryProcessInterceptor.execute(RetryProcessInterceptor.java:68)

at org.mule.module.ldap.connectivity.ManagedConnectionProcessTemplate.execute(ManagedConnectionProcessTemplate.java:33)

at org.mule.module.ldap.processors.LookupMessageProcessor.process(LookupMessageProcessor.java:116)

at org.mule.execution.ExceptionToMessagingExceptionExecutionInterceptor.execute(ExceptionToMessagingExceptionExecutionInterceptor.java:27)

at org.mule.execution.MessageProcessorNotificationExecutionInterceptor.execute(MessageProcessorNotificationExecutionInterceptor.java:46)

at org.mule.execution.MessageProcessorExecutionTemplate.execute(MessageProcessorExecutionTemplate.java:43)

at org.mule.processor.chain.DefaultMessageProcessorChain.doProcess(DefaultMessageProcessorChain.java:93)

at org.mule.processor.chain.AbstractMessageProcessorChain.process(AbstractMessageProcessorChain.java:66)

at org.mule.execution.ExceptionToMessagingExceptionExecutionInterceptor.execute(ExceptionToMessagingExceptionExecutionInterceptor.java:27)

at org.mule.execution.MessageProcessorExecutionTemplate.execute(MessageProcessorExecutionTemplate.java:43)

at org.mule.processor.AbstractInterceptingMessageProcessorBase.processNext(AbstractInterceptingMessageProcessorBase.java:105)

at org.mule.processor.AsyncInterceptingMessageProcessor.process(AsyncInterceptingMessageProcessor.java:98)

at org.mule.execution.ExceptionToMessagingExceptionExecutionInterceptor.execute(ExceptionToMessagingExceptionExecutionInterceptor.java:27)

at org.mule.execution.MessageProcessorNotificationExecutionInterceptor.execute(MessageProcessorNotificationExecutionInterceptor.java:46)

at org.mule.execution.MessageProcessorExecutionTemplate.execute(MessageProcessorExecutionTemplate.java:43)

at org.mule.processor.chain.DefaultMessageProcessorChain.doProcess(DefaultMessageProcessorChain.java:93)

at org.mule.processor.chain.AbstractMessageProcessorChain.process(AbstractMessageProcessorChain.java:66)

at org.mule.execution.ExceptionToMessagingExceptionExecutionInterceptor.execute(ExceptionToMessagingExceptionExecutionInterceptor.java:27)

at org.mule.execution.MessageProcessorExecutionTemplate.execute(MessageProcessorExecutionTemplate.java:43)

at org.mule.processor.AbstractInterceptingMessageProcessorBase.processNext(AbstractInterceptingMessageProcessorBase.java:105)

at org.mule.interceptor.AbstractEnvelopeInterceptor.process(AbstractEnvelopeInterceptor.java:55)

at org.mule.execution.ExceptionToMessagingExceptionExecutionInterceptor.execute(ExceptionToMessagingExceptionExecutionInterceptor.java:27)

at org.mule.execution.MessageProcessorNotificationExecutionInterceptor.execute(MessageProcessorNotificationExecutionInterceptor.java:46)

at org.mule.execution.MessageProcessorExecutionTemplate.execute(MessageProcessorExecutionTemplate.java:43)

at org.mule.processor.AbstractInterceptingMessageProcessorBase.processNext(AbstractInterceptingMessageProcessorBase.java:105)

at org.mule.processor.AbstractFilteringMessageProcessor.process(AbstractFilteringMessageProcessor.java:44)

at org.mule.execution.ExceptionToMessagingExceptionExecutionInterceptor.execute(ExceptionToMessagingExceptionExecutionInterceptor.java:27)

at org.mule.execution.MessageProcessorNotificationExecutionInterceptor.execute(MessageProcessorNotificationExecutionInterceptor.java:46)

at org.mule.execution.MessageProcessorExecutionTemplate.execute(MessageProcessorExecutionTemplate.java:43)

at org.mule.processor.AbstractInterceptingMessageProcessorBase.processNext(AbstractInterceptingMessageProcessorBase.java:105)

at org.mule.construct.AbstractPipeline$1.process(AbstractPipeline.java:102)

at org.mule.execution.ExceptionToMessagingExceptionExecutionInterceptor.execute(ExceptionToMessagingExceptionExecutionInterceptor.java:27)

at org.mule.execution.MessageProcessorNotificationExecutionInterceptor.execute(MessageProcessorNotificationExecutionInterceptor.java:46)

at org.mule.execution.MessageProcessorExecutionTemplate.execute(MessageProcessorExecutionTemplate.java:43)

at org.mule.processor.chain.DefaultMessageProcessorChain.doProcess(DefaultMessageProcessorChain.java:93)

at org.mule.processor.chain.AbstractMessageProcessorChain.process(AbstractMessageProcessorChain.java:66)

at org.mule.processor.chain.InterceptingChainLifecycleWrapper.doProcess(InterceptingChainLifecycleWrapper.java:57)

at org.mule.processor.chain.AbstractMessageProcessorChain.process(AbstractMessageProcessorChain.java:66)

at org.mule.processor.chain.InterceptingChainLifecycleWrapper.access$001(InterceptingChainLifecycleWrapper.java:29)

at org.mule.processor.chain.InterceptingChainLifecycleWrapper$1.process(InterceptingChainLifecycleWrapper.java:90)

at org.mule.execution.ExceptionToMessagingExceptionExecutionInterceptor.execute(ExceptionToMessagingExceptionExecutionInterceptor.java:27)

at org.mule.execution.MessageProcessorNotificationExecutionInterceptor.execute(MessageProcessorNotificationExecutionInterceptor.java:46)

at org.mule.execution.MessageProcessorExecutionTemplate.execute(MessageProcessorExecutionTemplate.java:43)

at org.mule.processor.chain.InterceptingChainLifecycleWrapper.process(InterceptingChainLifecycleWrapper.java:85)

at org.mule.construct.AbstractPipeline$3.process(AbstractPipeline.java:194)

at org.mule.execution.ExceptionToMessagingExceptionExecutionInterceptor.execute(ExceptionToMessagingExceptionExecutionInterceptor.java:27)

at org.mule.execution.MessageProcessorNotificationExecutionInterceptor.execute(MessageProcessorNotificationExecutionInterceptor.java:46)

at org.mule.execution.MessageProcessorExecutionTemplate.execute(MessageProcessorExecutionTemplate.java:43)

at org.mule.processor.chain.SimpleMessageProcessorChain.doProcess(SimpleMessageProcessorChain.java:47)

at org.mule.processor.chain.AbstractMessageProcessorChain.process(AbstractMessageProcessorChain.java:66)

at org.mule.processor.chain.InterceptingChainLifecycleWrapper.doProcess(InterceptingChainLifecycleWrapper.java:57)

at org.mule.processor.chain.AbstractMessageProcessorChain.process(AbstractMessageProcessorChain.java:66)

at org.mule.processor.chain.InterceptingChainLifecycleWrapper.access$001(InterceptingChainLifecycleWrapper.java:29)

at org.mule.processor.chain.InterceptingChainLifecycleWrapper$1.process(InterceptingChainLifecycleWrapper.java:90)

at org.mule.execution.ExceptionToMessagingExceptionExecutionInterceptor.execute(ExceptionToMessagingExceptionExecutionInterceptor.java:27)

at org.mule.execution.MessageProcessorNotificationExecutionInterceptor.execute(MessageProcessorNotificationExecutionInterceptor.java:46)

at org.mule.execution.MessageProcessorExecutionTemplate.execute(MessageProcessorExecutionTemplate.java:43)

at org.mule.processor.chain.InterceptingChainLifecycleWrapper.process(InterceptingChainLifecycleWrapper.java:85)

at org.mule.execution.ExceptionToMessagingExceptionExecutionInterceptor.execute(ExceptionToMessagingExceptionExecutionInterceptor.java:27)

at org.mule.execution.MessageProcessorNotificationExecutionInterceptor.execute(MessageProcessorNotificationExecutionInterceptor.java:46)

at org.mule.execution.MessageProcessorExecutionTemplate.execute(MessageProcessorExecutionTemplate.java:43)

at org.mule.processor.chain.SimpleMessageProcessorChain.doProcess(SimpleMessageProcessorChain.java:47)

at org.mule.processor.chain.AbstractMessageProcessorChain.process(AbstractMessageProcessorChain.java:66)

at org.mule.processor.chain.InterceptingChainLifecycleWrapper.doProcess(InterceptingChainLifecycleWrapper.java:57)

at org.mule.processor.chain.AbstractMessageProcessorChain.process(AbstractMessageProcessorChain.java:66)

at org.mule.processor.chain.InterceptingChainLifecycleWrapper.access$001(InterceptingChainLifecycleWrapper.java:29)

at org.mule.processor.chain.InterceptingChainLifecycleWrapper$1.process(InterceptingChainLifecycleWrapper.java:90)

at org.mule.execution.ExceptionToMessagingExceptionExecutionInterceptor.execute(ExceptionToMessagingExceptionExecutionInterceptor.java:27)

at org.mule.execution.MessageProcessorNotificationExecutionInterceptor.execute(MessageProcessorNotificationExecutionInterceptor.java:46)

at org.mule.execution.MessageProcessorExecutionTemplate.execute(MessageProcessorExecutionTemplate.java:43)

at org.mule.processor.chain.InterceptingChainLifecycleWrapper.process(InterceptingChainLifecycleWrapper.java:85)

at org.mule.transport.AbstractMessageReceiver.routeMessage(AbstractMessageReceiver.java:220)

at org.mule.transport.AbstractMessageReceiver.routeMessage(AbstractMessageReceiver.java:202)

at org.mule.transport.AbstractMessageReceiver.routeMessage(AbstractMessageReceiver.java:194)

at org.mule.transport.AbstractMessageReceiver.routeMessage(AbstractMessageReceiver.java:181)

at org.mule.transport.http.HttpMessageReceiver$HttpWorker$1.process(HttpMessageReceiver.java:311)

at org.mule.transport.http.HttpMessageReceiver$HttpWorker$1.process(HttpMessageReceiver.java:306)

at org.mule.execution.ExecuteCallbackInterceptor.execute(ExecuteCallbackInterceptor.java:20)

at org.mule.execution.HandleExceptionInterceptor.execute(HandleExceptionInterceptor.java:34)

at org.mule.execution.HandleExceptionInterceptor.execute(HandleExceptionInterceptor.java:18)

at org.mule.execution.BeginAndResolveTransactionInterceptor.execute(BeginAndResolveTransactionInterceptor.java:58)

at org.mule.execution.ResolvePreviousTransactionInterceptor.execute(ResolvePreviousTransactionInterceptor.java:48)

at org.mule.execution.SuspendXaTransactionInterceptor.execute(SuspendXaTransactionInterceptor.java:54)

at org.mule.execution.ValidateTransactionalStateInterceptor.execute(ValidateTransactionalStateInterceptor.java:44)

at org.mule.execution.IsolateCurrentTransactionInterceptor.execute(IsolateCurrentTransactionInterceptor.java:44)

at org.mule.execution.ExternalTransactionInterceptor.execute(ExternalTransactionInterceptor.java:52)

at org.mule.execution.RethrowExceptionInterceptor.execute(RethrowExceptionInterceptor.java:32)

at org.mule.execution.RethrowExceptionInterceptor.execute(RethrowExceptionInterceptor.java:17)

at org.mule.execution.TransactionalErrorHandlingExecutionTemplate.execute(TransactionalErrorHandlingExecutionTemplate.java:113)

at org.mule.execution.TransactionalErrorHandlingExecutionTemplate.execute(TransactionalErrorHandlingExecutionTemplate.java:34)

at org.mule.transport.http.HttpMessageReceiver$HttpWorker.doRequest(HttpMessageReceiver.java:305)

at org.mule.transport.http.HttpMessageReceiver$HttpWorker.processRequest(HttpMessageReceiver.java:251)

at org.mule.transport.http.HttpMessageReceiver$HttpWorker.run(HttpMessageReceiver.java:163)

at org.mule.work.WorkerContext.run(WorkerContext.java:311)

at java.util.concurrent.ThreadPoolExecutor$Worker.runTask(ThreadPoolExecutor.java:886)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:908)

at java.lang.Thread.run(Thread.java:680)

ERROR 2013-02-08 16:18:30,001 [[ldap_test].connector.http.mule.default.receiver.02] org.mule.retry.notifiers.ConnectNotifier: Failed to connect/reconnect: Work Descriptor. Root Exception was: javax.naming.NameNotFoundException: [LDAP: error code 32 - No Such Object]; remaining name 'cn=ldapUser,ou=people,dc=example,dc=com'; resolved object com.sun.jndi.ldap.LdapCtx@c22b29a. Type: class javax.naming.NameNotFoundException

ERROR 2013-02-08 16:18:30,003 [[ldap_test].connector.http.mule.default.receiver.02] org.mule.exception.CatchMessagingExceptionStrategy:

********************************************************************************

Message : Failed to invoke lookup. Message payload is of type: String

Code : MULE_ERROR--2

--------------------------------------------------------------------------------

Exception stack is:

1. javax.naming.NameNotFoundException: [LDAP: error code 32 - No Such Object]; remaining name 'cn=ldapUser,ou=people,dc=example,dc=com'; resolved object com.sun.jndi.ldap.LdapCtx@c22b29a (javax.naming.NameNotFoundException)

com.sun.jndi.ldap.LdapCtx:3092 (http://java.sun.com/j2ee/sdk_1.3/techdocs/api/javax/naming/NameNotFoundException.html)

2. [LDAP: error code 32 - No Such Object] (org.mule.module.ldap.ldap.api.NameNotFoundException)

sun.reflect.NativeConstructorAccessorImpl:-2 (http://www.mulesoft.org/docs/site/current3/apidocs/org/mule/module/ldap/ldap/api/NameNotFoundException.html)

3. Failed to invoke lookup. Message payload is of type: String (org.mule.api.MessagingException)

org.mule.module.ldap.processors.LookupMessageProcessor:141 (http://www.mulesoft.org/docs/site/current3/apidocs/org/mule/api/MessagingException.html)

--------------------------------------------------------------------------------

Root Exception stack trace:

javax.naming.NameNotFoundException: [LDAP: error code 32 - No Such Object]; remaining name 'cn=ldapUser,ou=people,dc=example,dc=com'

at com.sun.jndi.ldap.LdapCtx.mapErrorCode(LdapCtx.java:3092)

at com.sun.jndi.ldap.LdapCtx.processReturnCode(LdapCtx.java:3013)

at com.sun.jndi.ldap.LdapCtx.processReturnCode(LdapCtx.java:2820)

+ 3 more (set debug level logging or '-Dmule.verbose.exceptions=true' for everything)

********************************************************************************

INFO 2013-02-08 16:18:30,681 [[ldap_test].connector.http.mule.default.receiver.02] org.mule.module.ldap.LDAPConnector: Added entry cn=ldapUser,ou=people,dc=example,dc=com

INFO 2013-02-08 16:18:30,690 [[ldap_test].connector.http.mule.default.receiver.02] org.mule.api.processor.LoggerMessageProcessor: dn: cn=ldapUser,ou=people,dc=example,dc=com

sn: foobar

cn: foobar

objectClass: person

Notice the NameNotFoundException block. The exception is handled by the catch exception strategy (CatchMessagingExceptionStrategy in the log), which then triggers the addition of the LDAP entry.

We can also verify this through an LDAP browser.

This is an extremely simple example, but hopefully it gives an idea of how easily Mule can integrate with LDAP with minimal effort.